October 29, 2025

Federal Surveillance Expansion Raises Alarms: Millions Spent on AI-Powered Social Media Monitoring

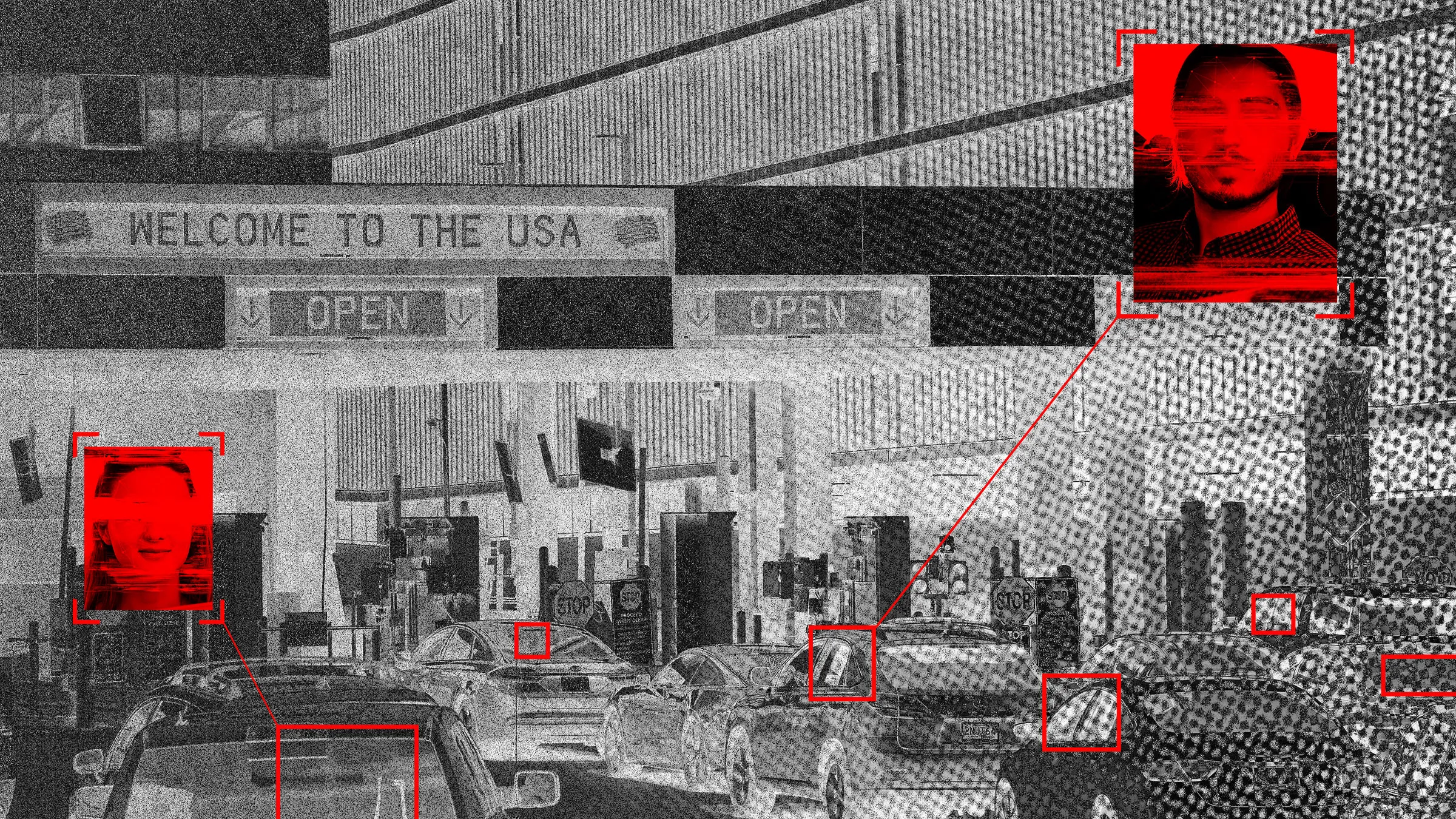

In a move drawing sharp criticism from civil liberties advocates, U.S. Immigration and Customs Enforcement (ICE) has spent millions of dollars on a new artificial intelligence–powered social media monitoring system. The program, recently revealed by a Truthout investigation, underscores the federal government’s growing use of digital surveillance technology to track online behavior, including that of immigrants and activists.

According to federal contracting records, ICE signed a five-year, $5.7 million deal with Carahsoft Technology for access to the Zignal Labs platform, which is capable of analyzing more than eight billion social media posts daily. The system uses artificial intelligence and machine learning to detect trends, keywords, and conversations across multiple platforms in real time.

Zignal Labs’ software has also been linked to the U.S. Department of Defense and the Israel Defense Forces, and it has reportedly been marketed as a “tactical intelligence” tool capable of supporting ground operations.

Civil Liberties Concerns

Immigrant rights groups and privacy watchdogs warn that ICE’s growing reliance on automated monitoring could lead to widespread surveillance of individuals based solely on their social media activity.

This kind of AI surveillance has a chilling effect on free speech. When people know that their tweets, posts, or videos might be flagged by an algorithm and reviewed by a federal agency, it changes how they participate in public discourse.

Advocates fear the technology could disproportionately target immigrant and multilingual communities, where cultural expressions or language differences could be misinterpreted as threats or “suspicious” behavior.

Lack of Oversight and Transparency

The Truthout report also highlights how little is known about how ICE will use this data or what safeguards exist. Federal documents provide limited information on how long user data will be stored, how it will be shared, or whether bias testing has been performed on the AI systems used.

Critics argue that the lack of transparency in contracts of this nature makes it difficult for the public to understand how their online presence is being monitored. “Without public oversight, there’s a real danger of these tools being misused,” said a policy analyst with a civil rights organization.

Broader Implications

The purchase fits a larger federal trend toward integrating artificial intelligence into law enforcement. Over the past decade, multiple agencies, including the Department of Homeland Security and the FBI, have expanded their use of predictive policing and social-media-based intelligence systems.

For communities with large immigrant populations, such as those across South Texas and the Rio Grande Valley, the implications are significant. Local leaders and advocacy groups are urging transparency and education on digital privacy to ensure residents understand how their data might be monitored and used.

Social media is a vital platform for civic engagement, organizing, and community building. We cannot allow fear of surveillance to silence those voices.

Calls for Accountability

Civil rights groups are now calling on Congress to impose stricter oversight of government use of AI surveillance tools and to protect individuals’ rights to free expression.

Until such measures are enacted, experts warn that the growing intersection between artificial intelligence and law enforcement could reshape the boundaries of privacy and public participation in the digital age.